I recently attended a training course on a "well-known" DRM technology in the streaming area. The training itself was very interesting, but I was even more interested in an MPEG-DASH demo set up by the people hosting the event: the Fraunhofer Institute.

The demonstration ran on four Raspberry Pi boards: one for video capture using the camera module and three others for transcoding.

FOKUS Live transcoding Raspberry Pi DEMO in MPEG-DASH

The resulting streams had low latency (about 15 seconds) because the encoding was done directly in hardware by the GPU of the Broadcom BCM2835 chip.

That’s what made me decide to test these encoding capabilities and see what could be achieved with this cheap little box. And guess what? One week later the new Raspberry Pi 2 was released, with 4 cores and 1 GB of RAM! Time to play.

I bought one of these RPi 2 boards and started to play with an RTSP stream and Wowza running on the board itself.

So here is the setup:

- Raspberry Pi 2

- Installed Raspbian from here : http://www.raspberrypi.org/downloads/

- GStreamer for the transcoding (the 1.0 tools, since the pipeline is launched with gst-launch-1.0): apt-get update ; apt-get install gstreamer1.0-tools

- And finally the Wowza 4.1.1 Debian package: http://www.wowza.com/downloads/WowzaStreamingEngine-4-1-1/WowzaStreamingEngine-4.1.1.deb.bin

Now let’s have a look at the workflow:

IP camera -> Transcoding x3 -> Wowza input streams -> Multi-bitrate (like MPEG-DASH) client streaming

This is usually achieved by running the Wowza Transcoder directly on a 64-bit CISC machine. But here we are running on ARM (RISC), so the Transcoder add-on is not available.

That’s why GStreamer is our friend. It’s modular, open-source, and has the omxh264enc element, which gives access to the GPU’s hardware H.264 encoder through the OpenMAX interface.

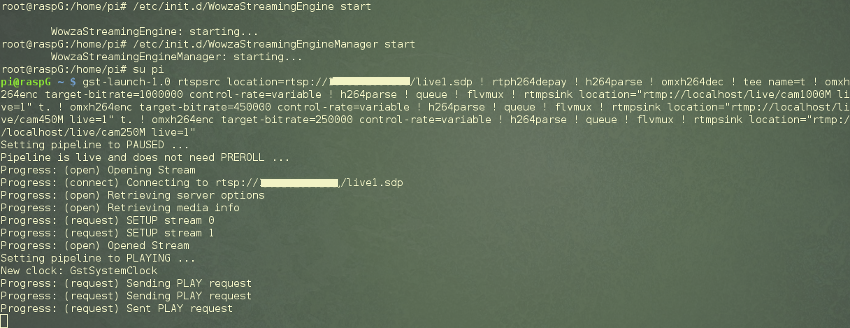

The pipeline syntax is a bit long because we need to tee the decoded input source into 3 encoding branches:

gst-launch-1.0 rtspsrc location=rtsp://1X.XXX.XXX.X8/live1.sdp ! rtph264depay ! h264parse ! omxh264dec ! tee name=t ! omxh264enc target-bitrate=1000000 control-rate=variable ! h264parse ! queue ! flvmux ! rtmpsink location="rtmp://localhost/live/cam1000M live=1" t. ! omxh264enc target-bitrate=450000 control-rate=variable ! h264parse ! queue ! flvmux ! rtmpsink location="rtmp://localhost/live/cam450M live=1" t. ! omxh264enc target-bitrate=250000 control-rate=variable ! h264parse ! queue ! flvmux ! rtmpsink location="rtmp://localhost/live/cam250M live=1"

GStreamer transcoding pipeline
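Since the three branches differ only by bitrate and stream name, the command can also be generated rather than typed by hand. Here is a minimal sketch, not the script I actually used: the function names `branch` and `build_pipeline` are made up for the illustration, the camera address is the masked one from above, and the script only prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: generate the multi-bitrate gst-launch-1.0 command from a list of
# bitrates in kbit/s. Helper names are illustrative only.

SRC="rtsp://1X.XXX.XXX.X8/live1.sdp"   # masked camera address, replace with yours
APP="rtmp://localhost/live"             # Wowza "live" application on the same board

# Print one tee branch: hardware encode, parse, queue, FLV mux, RTMP push.
# $1 is the target bitrate in kbit/s; the stream is named cam<kbps>M.
branch() {
    printf 't. ! omxh264enc target-bitrate=%s000 control-rate=variable ! h264parse ! queue ! flvmux ! rtmpsink location="%s/cam%sM live=1"' "$1" "$APP" "$1"
}

# Print the whole command: RTSP input, hardware decode, tee, then one
# branch per requested bitrate.
build_pipeline() {
    printf 'gst-launch-1.0 rtspsrc location=%s ! rtph264depay ! h264parse ! omxh264dec ! tee name=t' "$SRC"
    for kbps in "$@"; do
        printf ' '
        branch "$kbps"
    done
    printf '\n'
}

# Dry run: show the command for the three renditions used in this post.
build_pipeline 1000 450 250
```

Every branch is attached with `t. !` here instead of linking the first encoder directly after the tee; gst-launch treats both forms the same way. Once the printed command looks right, it can be piped into `sh` to actually start the transcoding.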

For this example, and because we are sending the data to localhost, I’ve disabled RTMP security on the Wowza server.

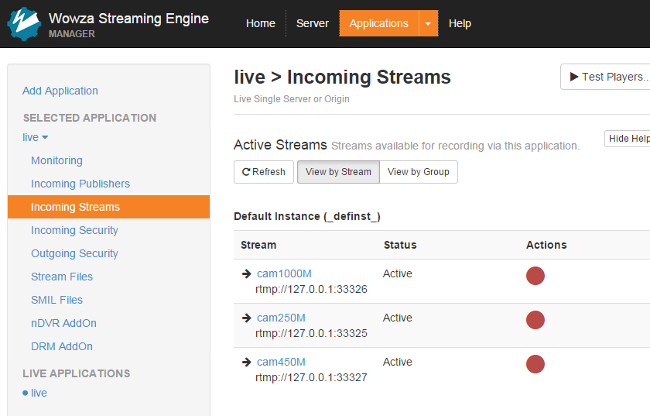

So here are the 3 input streams on the live application:

Wowza incoming streams

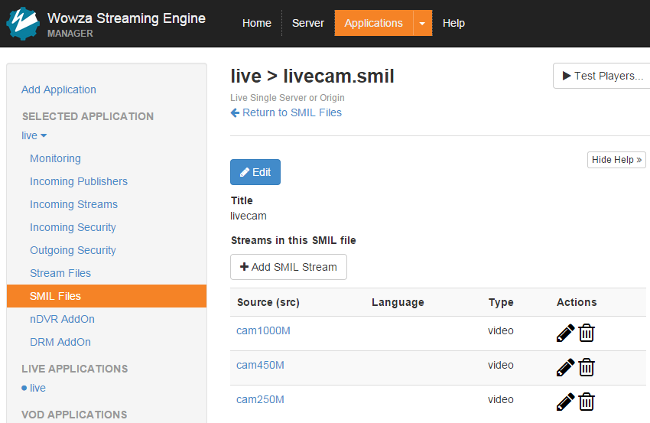

Then we just add the streams to a SMIL file to configure adaptive bitrate streaming:

SMIL file for ABR

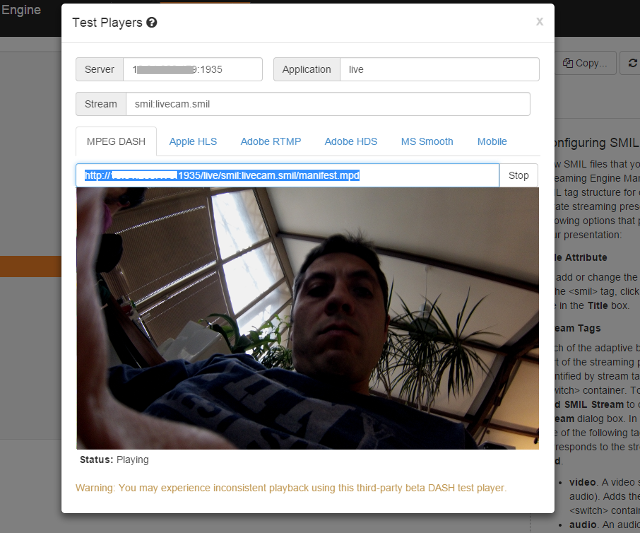
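For readers who cannot see the screenshot, a SMIL file of this kind can be sketched as follows. This is an assumption-laden illustration rather than a copy of my file: the `write_smil` helper is made up, the `system-bitrate` values simply reuse the encoder target bitrates, and the layout follows the simple `<switch>`/`<video>` form that Wowza accepts as far as I know.

```shell
#!/bin/sh
# Sketch: print a Wowza-style SMIL playlist listing one <video> entry per
# rendition. Bitrates are given in kbit/s; stream names follow the
# cam<kbps>M pattern used by the GStreamer pipeline.

write_smil() {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<smil>'
    echo '  <head></head>'
    echo '  <body>'
    echo '    <switch>'
    for kbps in "$@"; do
        # system-bitrate is in bit/s (assumed equal to the encoder target bitrate)
        printf '      <video src="cam%sM" system-bitrate="%s000"/>\n' "$kbps" "$kbps"
    done
    echo '    </switch>'
    echo '  </body>'
    echo '</smil>'
}

# Print the playlist for the three renditions of this post.
write_smil 1000 450 250
```

Redirecting the output to a file such as `cam.smil` (a hypothetical name) in the application's content directory should make the three renditions selectable as one adaptive stream; with Wowza's usual URL scheme the DASH manifest would then be requested as something like `http://<board-address>:1935/live/smil:cam.smil/manifest.mpd`.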

And there we go:

MPEG-DASH streaming

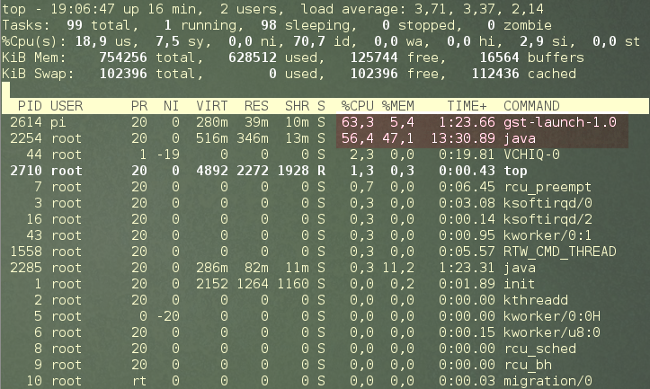

Regarding performance, using omxh264 for the transcoding limits CPU usage. When transcoding 3 streams at Main profile, Level 3, the gst-launch pipeline uses about 60% CPU, leaving the hard work to the GPU.

As you can see, Java is also eating about 50-60% CPU, so it might be useful to stop the StreamingEngineManager and to reduce micro-SD I/O by mounting another local disk for logging.

Gérard Maulino